The Codurance London office came alive last Saturday, 26th April. We were hosting an AI Hackathon, bringing people together specifically to explore the rapidly evolving landscape of AI code assistance tooling.

We welcomed a fantastic group of senior software developers from various organisations, all keen to get hands-on and explore what these tools really mean for our craft. The goal was to critically assess their impact on software development: the good, the bad, and the surprising.

The format was simple: attendees were divided into two groups and asked to work in pairs to solve code challenges. Initially, one group solved a challenge using AI code assistance tooling whilst the other relied on traditional methods. Later in the day the roles were flipped, meaning everyone had the chance to solve a challenge both with and without AI code assistance tooling. This comparison was key to the day. Developers used a range of AI tools, including ChatGPT, GitHub Copilot, Cursor, Amazon Q, Roo Code and JetBrains' latest offering, Junie.

At various points during the day, we would bring all of the attendees together to share their specific experiences and insights with the wider group for discussion.

The challenges themselves were designed to mimic a common pattern in software applications: storing and retrieving data, exposing that data through a number of APIs, and building a user-friendly frontend to consume those APIs.

While the explicit focus was on the AI tooling, watching seasoned developers huddled around monitors, discussing ideas, challenging assumptions, and sharing breakthroughs, was a powerful reminder of the collaborative energy that fuels so much innovation in our field. It is something I personally had not seen at this scale since the pandemic.

We are still analysing the outputs from the day and will share a more detailed analysis of the findings soon. However, even from the immediate observations during the day, some clear themes emerged that are worth sharing here:

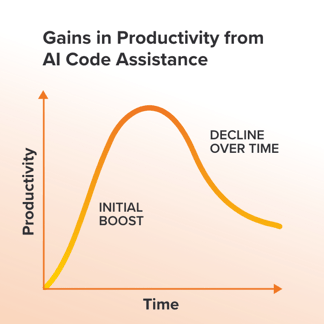

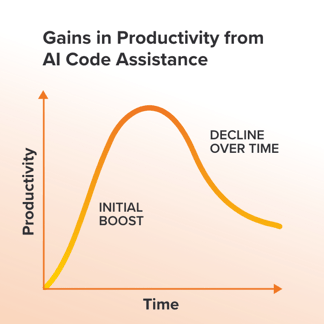

The Initial Rocket Boost (and the Subsequent Slowdown)

There's no denying it: all of the developers reported that getting started on a problem with AI assistance felt significantly faster. The overhead of writing boilerplate code vanished, initial project structures appeared almost instantly, and progress felt incredibly rapid. However, this initial velocity often plateaued sharply. As complexity grew and the need for nuanced changes or refactoring increased, integrating those changes smoothly into the AI-generated codebase became noticeably more challenging. The effort required to steer the tool towards the right specific change seemed to increase non-linearly.

Prompt Engineering Matters

It became apparent that interacting effectively with these tools is a skill in itself. High-level, vague requests left the implementation details up to the AI tooling, which at times produced unhelpful results. Clear, specific, context-rich prompts were essential to guide the tools effectively. "Prompt Engineering" might feel like another buzzword, but the underlying principle of communicating intent clearly and precisely is fundamental. Treating the AI tool less like a magic wand and more like an incredibly fast, slightly literal-minded junior partner seemed to yield better results.

Holding the Reins

Perhaps the most subtle, yet crucial, observation was how easy it is to inadvertently cede control. When the AI is generating plausible-looking code quickly, the temptation to just accept it and move on is strong, and resisting it requires real discipline. Without careful review, critical thinking, and a firm hand guiding the overall direction, the AI can easily start driving the development process, potentially leading down paths that aren't strategically sound or easy to maintain. Keeping the developer firmly in the architect's seat, using the AI as a powerful assistant rather than the lead, is critical.

Unnecessary Collateral Changes

We frequently observed the tooling making broader changes than the task at hand required. Instead of a targeted modification, it would sometimes refactor or alter adjacent code unnecessarily. This 'blast radius', however small, made it harder to reason confidently about the impact of each change in isolation, which can complicate code reviews and debugging.

Struggles with Abstraction

A noticeable limitation was the tools' apparent difficulty in recognising and leveraging existing abstractions within the codebase. Rather than identifying a pattern and reusing an existing function or component, the default behaviour often leaned towards generating duplicated or near-duplicated code snippets. This tendency flies in the face of the DRY (Don't Repeat Yourself) principle and raises clear concerns about creating future maintenance burdens.

Investing in the Tools

Ultimately, despite the hype, these AI assistants are fundamentally tools. Like mastering a complex IDE, a new language feature, or a testing framework, unlocking their true potential requires a conscious investment in learning how to wield them effectively. Simply having access doesn't automatically translate to significant productivity gains or better code; skilful application comes with practice and a deliberate effort to understand their capabilities and limitations.

Closing Thoughts

Beyond the specific insights about the tools themselves, the energy of collaborative, in-person problem-solving was a fantastic takeaway in its own right. We'll be back with more detailed findings soon. For now, it's clear that AI tooling offers intriguing potential, but like any powerful tool, it requires skill, judgement, and a mindful approach to wield effectively.

In the meantime, if you'd like to learn more about AI and what this could mean for software development, read our latest GenAI e-book today.