- By Dave Bage

- ·

- Posted 15 Aug 2023

A visitor (pattern) came knocking

So I opened the door and this person wanted to provide me with a service. I told him “Look, I moved in here in 2021, its taken me ages to get..

Welcome pythonistas!

In our last video session on design patterns we focused exclusively on patterns for the object oriented paradigm (OOP). To some degree this was a meaningful starting point because the famous gang of four book was only about this paradigm.

Later, during a podcast chat about patters with Hibai Unzueta and Jose Huerta, Hibai explained his perspective on programming from a more functional paradigm.

For those of you who do not know about this paradigm I recommend you the HOW TO section on the topic from the Standard Library.

In general words, the functional programming paradigm focuses on the concatenation of pure functions to achieve a desired output by promoting immutability on the data and no side effects during the process. So no objects, no classes, just functions. The topic itself is more deep than this, so you can learn more about it here.

Does this mean that just by this shift of paradigm there are no similar problems that could be solved in a regular way, i.e. that there is no need for design patterns? It depends on your vision, but I would say that of course there is still room for patterns. There are still problems that happen in several contexts and could be solved applying the same "solution" or pattern.

I am not a pure functional programmer. I am closer to OOP in my daily life but there are situations in which a functional approach could help to describe the code and simplify the task for the whole team. I mention this because it does not matter if you are pure functional or pure OOP or pure procedural, the patterns that we are going to review here could be useful for you in all the paradigms, but in functional probably they will be a must.

Here we are going to review the next functional dosing patterns:

When one has a function that will produce the same output based on the same inputs it is likely that one wants to have a way to cache the output so you can perform faster.

The problem

Imagine the situation in which we have an expensive function that is called many times with similar arguments at different stages of our program. Ok, I agree, this is really artificial but I prefer to show you this example rather than the classical Fibonacci series example (multiple examples out there).

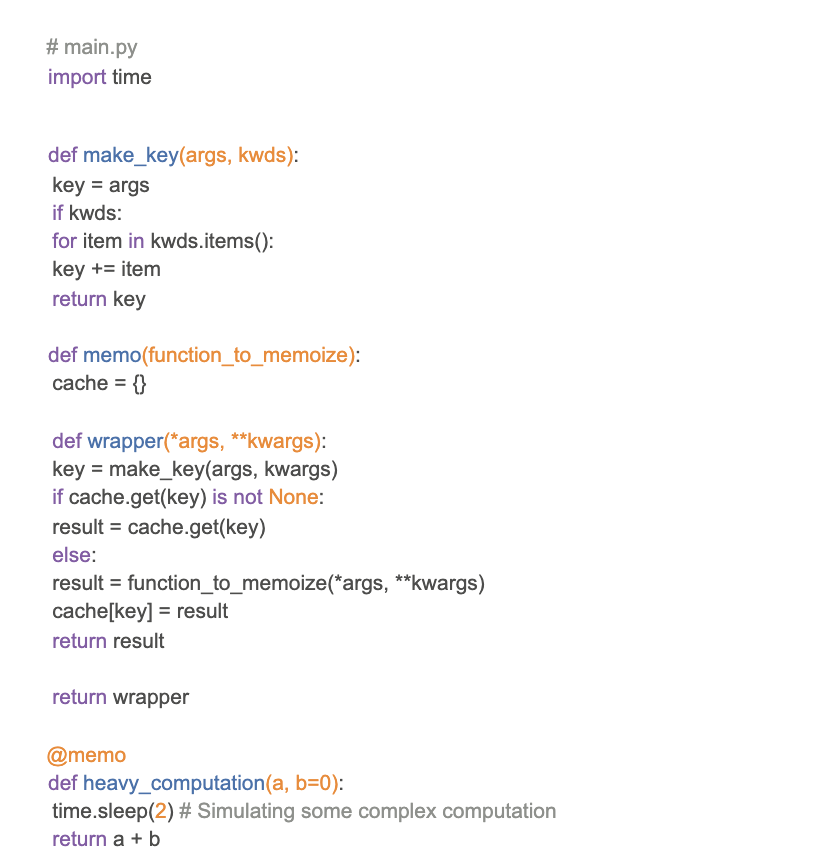

We have the code below:

If we run the script main.py we can check that the program will keep asking us numbers to add (for simplicity I will not check the inputs neither types) until we press N when we are asked if we want to continue. You might notice that heavy_computation takes some time despite always the same output given a couple of inputs.

The pattern

Memoization pattern comes to the rescue. If we are able to keep a part of the memory for caching the different calls, we will run much faster after the first call with given inputs.

One proposal could be this:

The approach in this case was to use another function memo that contains a dictionary to keep in memory the cached results. If the inputs were not seen before, the result of the heavy_computation is obtained and stored in that dictionary using the inputs as key. If the inputs have been used before we can retrieve the result of the computation from the dictionary by using them as a key. We have clearly improved the performance.

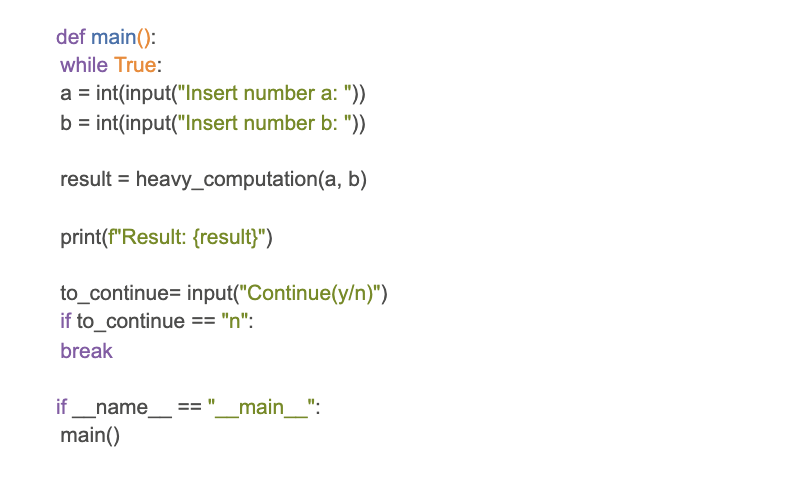

In the standard library we already have an equivalent (much better though!) solution within the functools package. The function lru_cache used as a decorator provides cache for hashable inputs and allows setting a size of the cache and whether the type of the inputs will be taken in account.

The limitations on this library can be overcome with the python-memoization package. I recommend taking a look at it to unfold all its potential. But if you are thinking about memoizing some numpy scientific computations it is likely the place to go.

In the functional programming paradigm usually we work with sequences on top of which we do not mutate anything but rather we apply some transformation to create new data. This transformation is the result of a set of functions applied in a chain to get the final result. Hence it makes sense that if the elements of the sequence are independent, the transformations could be applied in an independent manner and hence it will be possible to keep in memory just the data for the current element of the sequence.

The problem

Imagine the situation in which one has a large data set (>16GB) in a file that should be processed, let say a csv People_data.csv containing data about users. Something like the data below

Jeremy Horton,11-11-1981,+44113 496 0583,15732,2007,04:02:13

Jose Richardson,25-04-1989,(029) 2018 0916,15124,1980,09:53:55 Megan Miranda,21-10-1970,(0114) 496 0848,85295,1973,12:22:35

Kelly Watson,01-04-1983,+44141 4960378,67218,1978,01:09:27

Barry Schultz,28-05-1984,(0141) 4960653,52697,2005,11:25:39

Matthew Bailey,29-11-1997,0118 496 0721,40504,1977,10:38:48

Frank King,04-09-1995,01134960736,52205,1997,01:09:04

Veronica Cooper,28-02-1996,+44(0)20 74960686,72176,1990,19:26:06 David Nixon,12-08-1972,(01632) 960757,31890,1980,11:31:44

Stacey Chambers,23-08-1974,+44117 4960716,50237,1988,19:40:54

We want to have a histogram on the first name of the dataset, that is, count the frequency of a name in the given dataset.

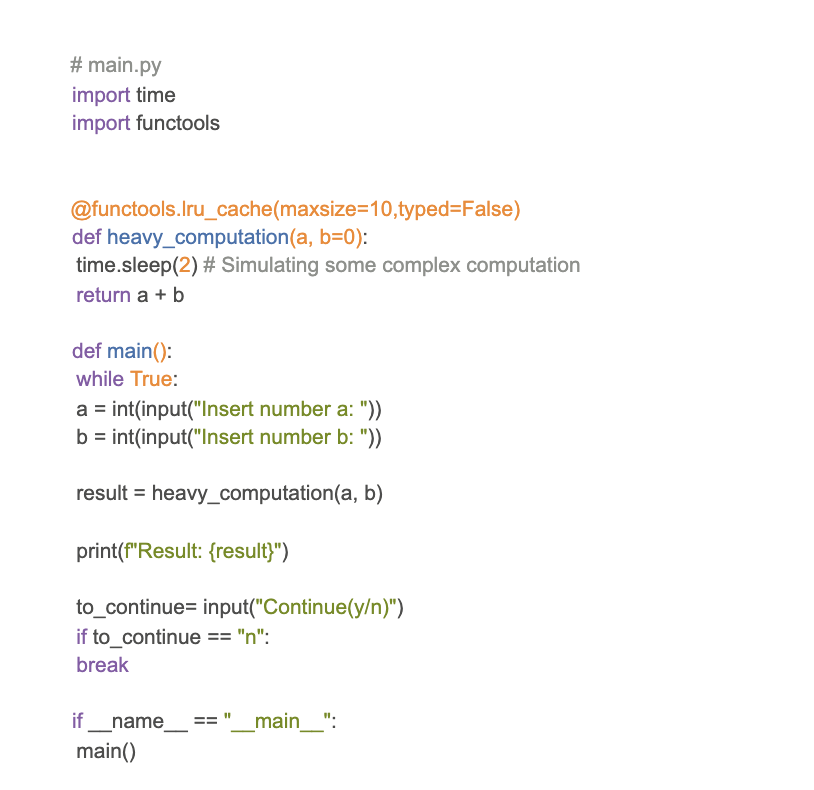

The first approach as an simple example could be:

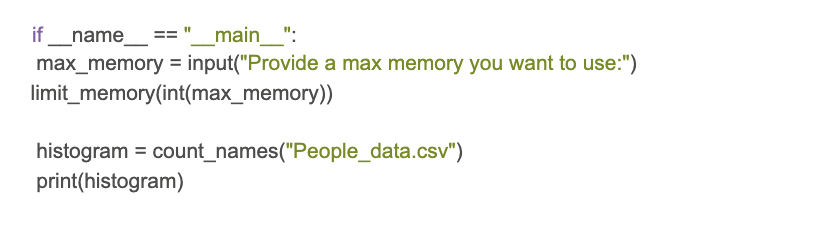

You will notice that we are opening a file and loading the whole content into memory, into the variable in_memory. If we limit the max memory with the limit_memory function, we will get an exception that will look like:

Provide a max memory you want to use:10

Traceback (most recent call last):

File

"/home/javier/Projects/functional_patterns/./src/lazy_sequence/problem.py", line 27, in <module>

histogram = count_names("People_data.csv")

File

"/home/javier/Projects/functional_patterns/./src/lazy_sequence/problem.py", line 10, in count_names

in_memory = fid.read().split("\n")

MemoryError

As you might notice if we are handling small files this is ok, but what if you have to deal with a large data set?

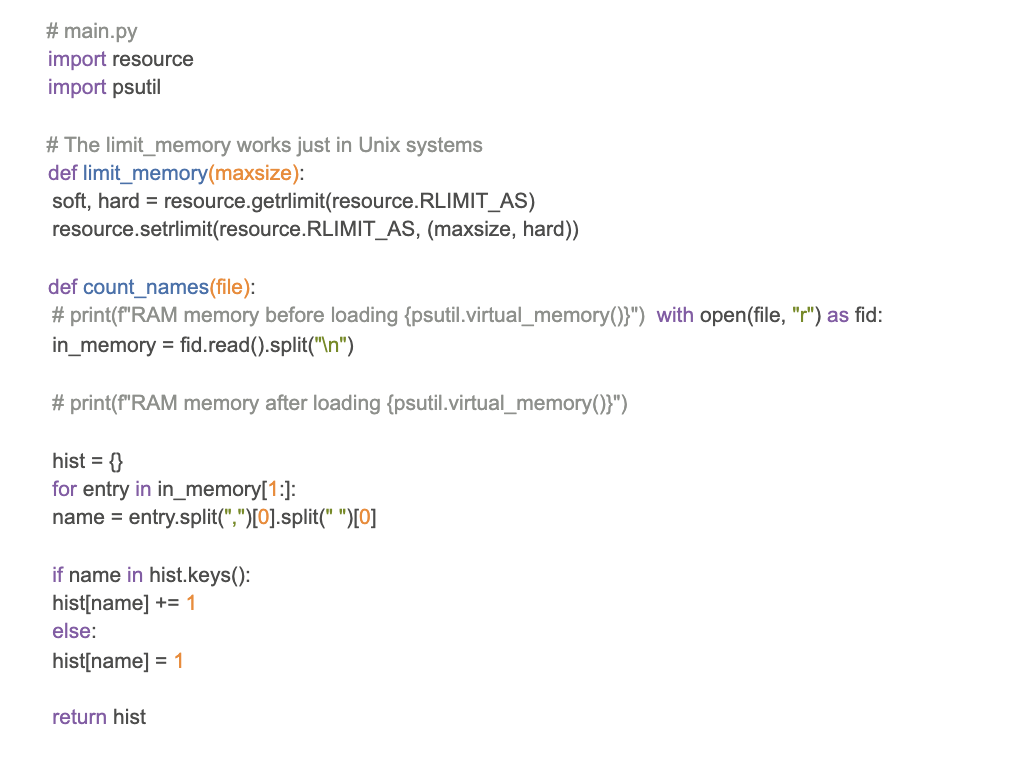

The pattern

The solution for these kinds of limitations comes with lazy loading. Laziness in this case means that there will be no load of data until that data is needed for an operation. For our case this means that we could read line by line of the file, having just one line in memory while it is processed.

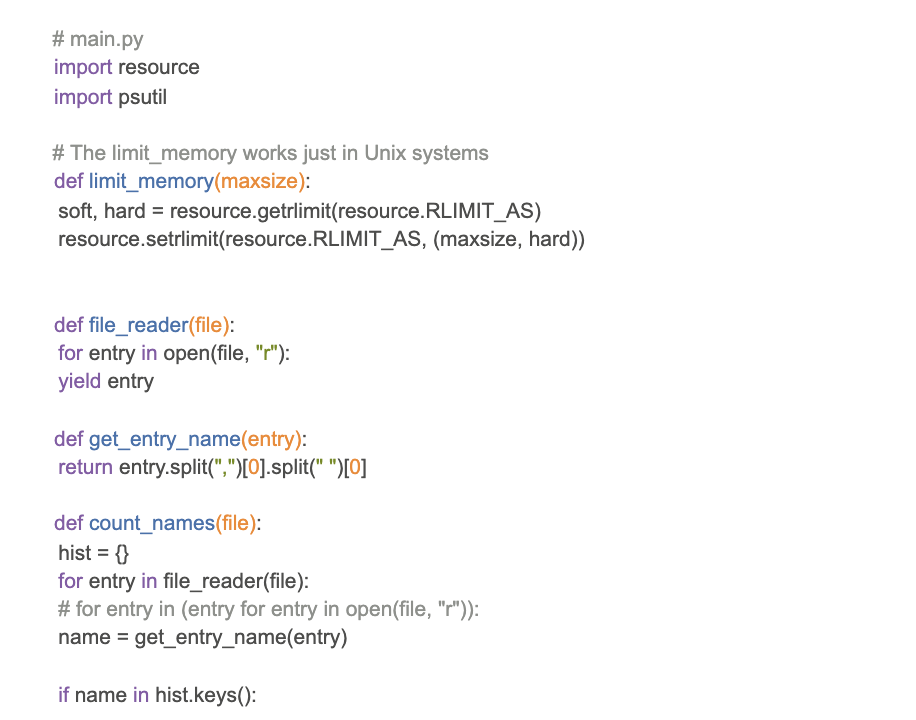

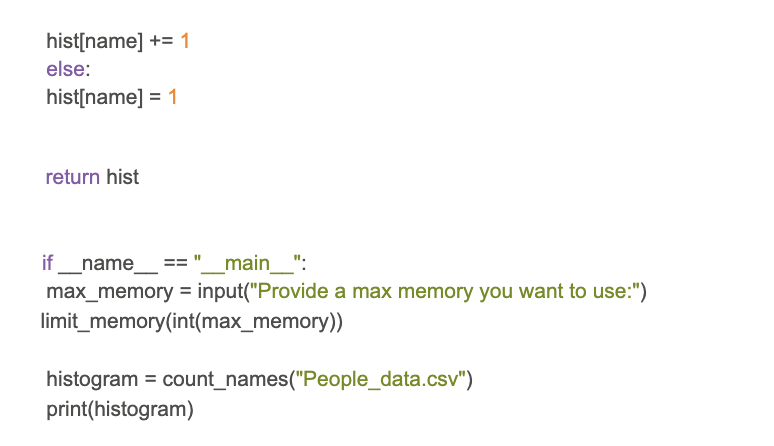

The code will look like:

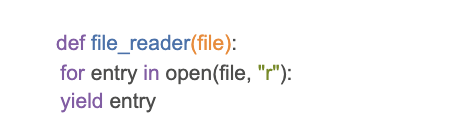

The key of the pattern are the lines:

This is called generator or generator function. Its characteristic is the yield keyword. The effect of this command is to return the value, but it does not exit the function. It signals to the Python interpreter that the state of the function should be preserved for a further call. In our case this means that we are reading just one entry of the dataset and returning it, while keeping the state of the for loop that is iterating throughout the field.

One alternative is to replace the call to this generator function in the for loop by a generator expression. The example with it is commented on in the code above. Its result is similar to the generator function.

There are cases when the behaviour of your function during a specific part of the runtime will receive the same input. This is the case when injecting configurations. In those cases we would like to initialise the function, just partially, so we do not need to pass the configuration each time we call the function.

The problem

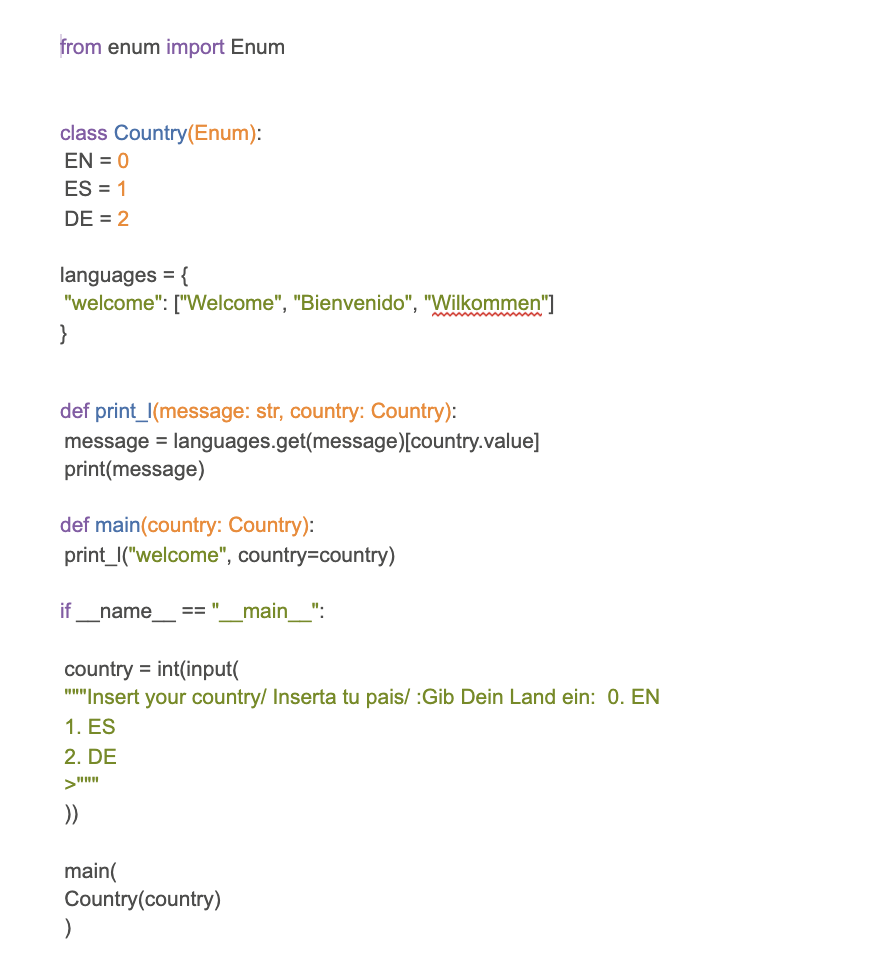

Imagine that depending on the choice of the user your application has to print in a different language. The code will look like:

The key part is the function print_l. Based on the country that it receives it will print at the console a different language. If we want to use it we will need to always pass the country parameter. In this case it is not critical because we are using a simple case and just one line.

Imagine a main function with different occurrences and that the country parameter of the function needs to be renamed. This will affect any single invocation throughout your code. So increasing the maintainability and decreasing readability.

The pattern

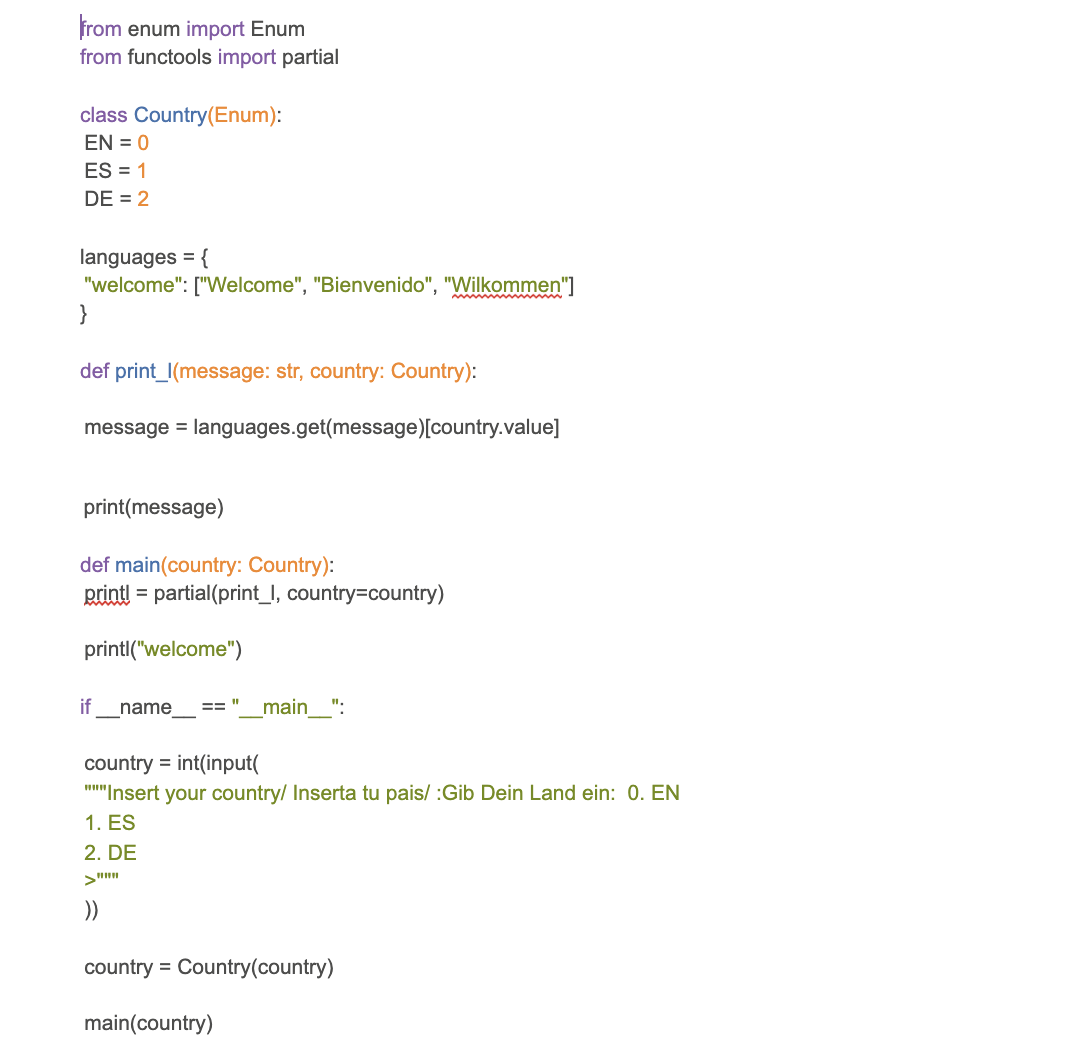

In this case what we can do is to invoke partially, i.e. just with the country parameter and then use this partial function across our code, saving time and increasing the quality.

The code will look like:

As you can see the standard library has a package called functools that we mentioned above. In this case we are using the partial method. This will receive the function that needs to be partially initialized and the parameters that we will use for that initialization. As you can see, in this case it is just “country”.

The function returns our partial function that later on can be invoked with just the remaining parameters.

There is not extensive and deep literature on patterns within the functional programming paradigm. Despite this, programmers found errors/problems that could be solved in the same way across a variety of situations. So they find patterns of errors and develop strategies to solve them, so they develop design patterns.

We have proved this point with the previous three patterns. The examples and code are just for grasping the idea. I invite you to go beyond and see how these patterns could be integrated in your daily practices.

In the next article we will cover more topics related to patterns and architecture...until then, remember to be brave and bold!

If you´d like this article then please check out the one below! :)

So I opened the door and this person wanted to provide me with a service. I told him “Look, I moved in here in 2021, its taken me ages to get..

In episode 36 of Codurance Talks, our UK North Regional Director, Kirsten Osborn and Engagement Manager, Simon Shaw sit down with Jonathan Smart to..

"Smart" Fridges are in. And FridgeCraft want to adopt this trend with their new test model. Our Smart Fridge kata will test your coding skills to not..

Join our newsletter for expert tips and inspirational case studies

Join our newsletter for expert tips and inspirational case studies