Handling tightly coupled applications

The House of Commons Public Accounts Committee has highlighted the pressure that legacy systems have placed on the government.

"Such systems are widespread across government, but can be unreliable, hard to support, and frustrate efforts to modernise services. This situation is not helped by the scarcity of specialist digital, data and technology skills…"1

Legacy systems in the public sector

Legacy systems can be defined as:

- Applications without automated tests2.

No matter which technologies they are written or how old or new they are, an application without a meaningful set of automated tests is an application that will evolve with difficulties. The lack of meaningful test coverage will drastically reduce confidence in making changes, and this will be more evident as the application becomes older: the application will still do those things for which it was created, but it cannot evolve. An application implemented without a meaningful set of automated tests brings tech debt from the start of its creation.34

- Systems that are outdated but still in use.

These systems can be based on products that cannot be updated because the vendor has ended the maintenance period, or the update licence was discontinued by the client, and the update to a new major version is too difficult to put in place.

The UK point of view about legacy systems;

In addition;

- The Government Digital Service stated that public companies should move to the cloud6. The GDS says: “This approach is generally more flexible, current, cheaper and quicker to deploy than using bespoke services over dedicated networks”

- Cloud migrations need specialised support to be successful.

The risks brought from legacy systems

The risks of procrastinating the modernisation of legacy systems range from loss of data, and outages to dramatic consequences (think of failures in critical sectors like hospitals or transportation - train routing system errors).

Modernisation (and migration) to get rid of the threat of legacy systems

The company system modernisation and migration must be driven by a well-defined strategy, and the experience of a specialised partner is key to avoiding failure. Read more about modernisation strategy in our new eBook: Crafting a Cloud Migration and Modernisation Strategy for the Public Sector.

The key concept to keep in mind to embrace modernisation is that the process can be progressive, tackling one piece of the system at a time.

An example of legacy systems and modernisation: the high coupling problem created by having a shared database, and its solution

This article is not exhaustive on the migration process, but shows in a simplified way how a single common problem can be managed.

There is a big limitation that affects many IT legacy stacks in the public sector: sometimes systems are tightly coupled, and this article talks specifically about the problem and the solution of database schema-sharing.

The database schema is a logical partition created inside an RDBMS database server (single instance or cluster); the schema contains tables, constraints, stored procedures and everything related to the schema.

There is no problem in sharing an on-premise database server between two or more applications. The application data in this database server must be segregated in database schemas to be accessed using different database users.

This article focuses on database schema sharing and is not meant to evaluate when a database server should or should not be shared between different applications.

For the purpose of this article, the terms "database" and "schema" are synonyms of "RDBMS schema" from here on.

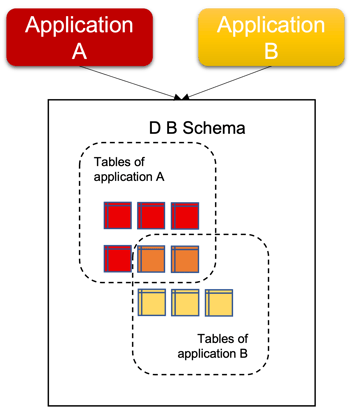

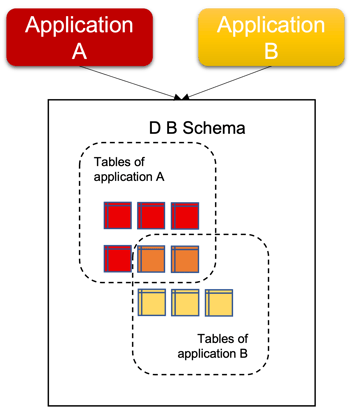

The easiest form of database sharing to think of, is the one in which two applications share the same schema: the applications have their tables in the same database schema, and some of these tables may be used by both applications. In this scenario, two applications use the same schema as their main one.

This picture shows the above scenario, with an overlap between the sets of applications’ tables:

There is another form of database sharing: legacy RDBMS were used as final endpoints for integration between two or more applications. In this scenario, application A has its main database and has a dependency on the data of application B; the data of application B are accessed by application A, not by specific services exposed by application B (e.g.: REST API, SOAP web services) but in one of these ways:

- Database direct access: this is done by allowing a third application to access the tables in the schema of another application.

- Database links: these are shortcuts between schemas and/or remote databases, and are supported by commercial and open-source enterprise RDBMSs (e.g.: Oracle, PostgreSQL).

Apart from database sharing, a more common anti-pattern is the store of business logic inside the database, the so-called “stored procedures”. Executing data operations as nearest as possible to the data could be useful, but there is the risk of lock-in: extracting business logic from the database frees the companies from this specific lock-in, allowing database switch (e.g.: from commercial to open-source), re-platforming (e.g.: from on-premise to a cloud offer) and refactoring (e.g.: from RDBMS to NoSQL).

Together with the stored procedures we can mention the database triggers, which call stored procedures consequently to an event. A write operation (insert/update/delete) on a schema could trigger a stored procedure that produces more changes than the ones on the records identified by the table and "where" clauses specified by the query.

A developer’s team can use a dblink to do a write operation on a database of another application, without caring about eventual triggers, creating entropy. These problems can be mitigated with specific user permission configurations at db level, but the best practice to apply is segregation: this leads to loosely coupled applications. Later in this article it will be shown how to get rid of dblinks.

Another possible drawback of sharing a database between two or more applications (directly or with dblinks) is the impossibility for an application to evolve, because the data model (tables and referential integrity statements like foreign keys) are shared with another application that maybe can't be modified for different reasons. If one application needs to evolve modifying the database schema and the other application can not be consequently updated, the evolution procedure is stuck.

It’s worth noting that a database shared between multiple applications will execute queries coming from all of these applications: if one of these queries bursts the CPU or locks some resources, then there will be consequences also for the other applications.

Applications can share the data in their database with database-sharing in a very simple way, but the price to pay is the high coupling: after years (or decades) of direct accesses and dblinks, it is difficult to update the schema to support new features, it is difficult to update the technology stack and is even harder to move the stack to the cloud.

In Part Two we will look at the steps required to decouple applications sharing a common database schema. In the meantime, why not download a copy of our new eBook which focuses on cloud migration and modernisation strategies in the Public Sector. Download your copy here.

Footnotes

- Challenges in implementing digital change: Committee of Public Accounts December 2021

- Sandro Mancuso, Working with legacy code

- TechTarget, definition of legacy system (legacy application)

- Talend, What is a Legacy System?

- House of Commons Committee of Public Accounts - Challenges in implementing digital change

- UK Gov, Central Digital and Data Office - Moving to modern network solutions