- By Emmanuel Valverde

- ·

- Posted 22 Mar 2024

Advanced Katas

You have reached the highest level. You have completed the learning phases and we move on to the more complex katas. Remember that in the link of..

This blog is part of a series of posts around TDD anti patterns. The first of this series covered: the liar, excessive setup, the giant and slow poke - as you might have guessed, in the industry, we usually talk about how things should be done, but sometimes we forget how we can learn from what makes testing harder.

This blog is the second in the series where we will focus on four more anti-patterns, named: The mockery, The inspector, The generous leftovers and The local hero. Each one focuses on a specific aspect of code that makes testing harder. Potentially, one of the root causes that companies argue they don't have the required time to build solutions guided by tests.

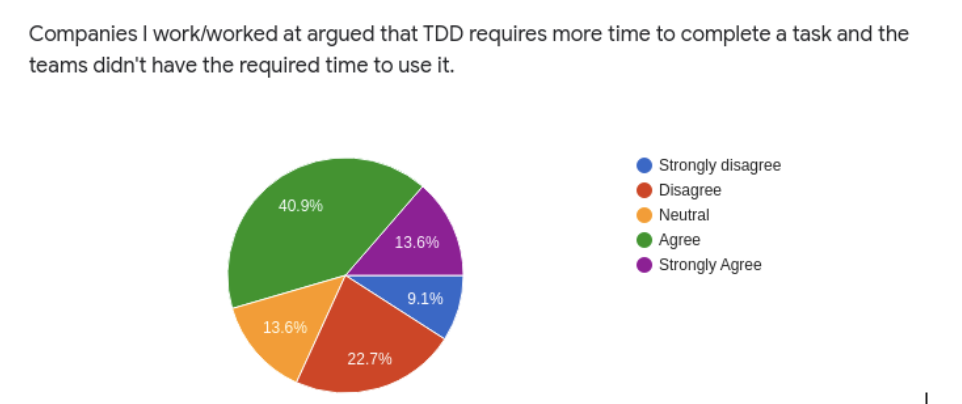

More than 50% of survey respondents agreed that in companies they have worked for, TDD requires more time to finish a task.

The mockery is one of the most popular anti pattern across developers, as it seems that everyone has had some experience mocking code to test. The first idea, Mocking is simple: avoid external code to focus on what you want to test. Therefore, mocking has its own naming and categories that make it unique and, at the same time, tricky to use correctly.

For example, Martin Fowler and Uncle Bob, classifies the mocks family into different types: dummies, spies, fakes and stubs. Uncle Bob refers to "True mocks" whereas Martin Fowler refers to "Test double". Uncle Bob explains that "mocks" got spread as it is easier to say: "I will mock that", "can you mock this"?

The difference between those are important, we, as developers, have the habit of calling everything a mock, thus misleading sometimes what we want to mean, spring.io goes even further, avoiding the debate about if we should use mocks or not (referring to the Chicago School and the London School of TDD) - I will do the same, this is a discussion that deserves a blog post itself. Martin Fowler also wrote about this very same subject and agrees that for a while he also thought about mocks and stubs being the same.

On the other hand, The mockery refers to the same definition as Uncle Bob, referring to all mocks. To clarify, let's split the anti pattern in two parts, the first, is the excessive number of mocks needed to test a class. As the number of mocks grow, the probability of testing the mock instead of the desired code goes up - it's also related to the Excessive Setup - here we start to see some connection between the anti-patterns.

The second part is, testing the mock instead of its interaction with the code under testing. For example, if we have the following code, that is an interaction with a payment gateway.

/** * Two constructor dependencies, both need to be * mocked in order to test the process method. */class PaymentService( private val userRepository: UserRepository, private val paymentGateway: PaymentGateway) { fun process( user: User, paymentDetails: PaymentDetails ): Boolean { if (userRepository.exists(user)) { return paymentGateway.pay(paymentDetails) } return false }With test:

class TestPaymentService { private val userRepository: UserRepository = mockk() private val paymentGateway: PaymentGateway = mockk() private val paymentService = PaymentService( userRepository, paymentGateway ) @Test fun paymentServiceProcessPaymentForUser() { val user: User = User() every { userRepository.exists(any()) } returns true every { paymentGateway.pay(any()) } returns true // setting up the return for the mock assertTrue(paymentService.process(user, PaymentDetails())) // asserting the mock }}In this example, the paymentGateway.pay, which is stubbed for the test purpose is what is being tested.

Often, the purpose of testing is mixed with "inspection", this time I will focus on the idea of inspecting instead of checking for behaviour (the output).

For example, while testing, it gets a bit trickier as we would like to verify if given an input X we will receive the outcome Y, in the following snippet, there is the method getFilesToWriteRelease and setFilesToWriteRelease.

If we think about the context of the class, why do such methods exist?

<?php/* skipped code */class Assembly{ /* skipped code */ public function __construct( FindVersion $findVersion, FileRepository $fileRepository, string $branchName, FilesToReleaseRepository $filesToReleaseRepository ) { $this->findVersion = $findVersion; $this->fileRepository = $fileRepository; $this->branchName = $branchName; $this->filesToReleaseRepository = $filesToReleaseRepository; } public function getFilesToWriteRelease(): array { return $this->filesToWriteRelease; } public function setFilesToWriteRelease(array $filesToWriteRelease) { $this->filesToWriteRelease = $filesToWriteRelease; return $this; } public function packVersion(): Release { $filesToRelease = $this->getFilesToWriteRelease(); if (count($filesToRelease) === 0) { throw new NoFilesToRelease(); } $files = []; /** @var File $file */ foreach ($filesToRelease as $file) { $files[] = $this->fileRepository->findFile( $this->findVersion->getProjectId(), sprintf('%s%s', $file->getPath(), $file->getName()), $this->branchName ); } $versionToRelease = $this->findVersion->versionToRelease(); $release = new Release(); $release->setProjectId($this->findVersion->getProjectId()); $release->setBranch($this->branchName); $release->setVersion($versionToRelease); $fileUpdater = new FilesUpdater($files, $release, $this->filesToReleaseRepository ); $filesToRelease = $fileUpdater->makeRelease(); $release->setFiles($filesToRelease); return $release; }}In this sense, if we think about Object oriented programming, we talk about invoking methods in objects. Such invocations allow us to interact and receive the result, but, the get/set is breaking the encapsulation just for the sake of testing.

Another possible reason for experience **the inspector** is to start tricking too much to achieve a specific part of the code with reflection. Luckily, a few years back, we had this question asked at stackoverflow. Is it bad having to use reflection? Before proceeding to this question, let's do a quick recap on what is reflection and why is it used in the first place?

Baeldung gives a few examples on why you might need reflection, the main usage of it is to get understanding of a given part of the code, which methods, which properties and act on those. The following example is used on his site:

public class Employee { private Integer id; private String name;}@Testpublic void whenNonPublicField_thenReflectionTestUtilsSetField() { Employee employee = new Employee(); ReflectionTestUtils.setField(employee, "id", 1); assertTrue(employee.getId().equals(1));}Some assumptions in the answers that points to using reflection as a bad practice:

While practising TDD, tasks such as setting up the state in which the test will run is part of the basics. Setting fake data, listeners, authentication, whatever it might be, we define them, because they are crucial for the test, but sometimes we forget to reset the state to be how it was before the test - the xUnit phase in which such preparation happens: "setup, exercise, verify, teardown".

It can cause different issues, and the first one is failing the next test, which was supposed to run without problems, the following list, tries to depict a few scenarios in which it might happen.

If we think about 3, such behaviour will get blurry when testing; for example, using persistent data is a must for end-2-end testing. But, on the other hand, 4 is a common source of errors while testing guided by tests. Often, as the mock is usually used to collect calls in the object (and verify it later), it is common to forget to restore its state.

Codingwithhugo depicts this behaviour in a code snippet using jest, giving the following test case (and assume they are in the same scope):

const mockFn = jest.fn(); // setting up the mockfunction fnUnderTest(args1) { mockFn(args1);}test('Testing once', () => { fnUnderTest('first-call'); expect(mockFn).toHaveBeenCalledWith('first-call'); expect(mockFn).toHaveBeenCalledTimes(1);});test('Testing twice', () => { fnUnderTest('second-call'); expect(mockFn).toHaveBeenCalledWith('second-call'); expect(mockFn).toHaveBeenCalledTimes(1);});The first test that calls the function under test will pass, but the second, will fail. The reason behind is the lack of reset in the mock. The test fails saying that the mockFn was called twice. Getting the flow as it should is as easy as:

test('Testing twice', () => { mockFn.mockClear(); // clears the previous execution fnUnderTest('second-call'); expect(mockFn).toHaveBeenCalledWith('second-call'); expect(mockFn).toHaveBeenCalledTimes(1);});Such behaviour is not easily faced by javascript developers, as the javascript functional scope prevents that out of the box. Anyways, being mindful of these details can make a difference while writing tests.

The TDD anti-patterns precede the container era, which was common to have differences between the machine in which the developer was working on and the server in which the application actually would run - often, as they weren't the same, configuration specific to the developer machine got in the way during the deployment process.

PHP for example, relies heavily on extensions that can or can't be enabled in th server. Extensions such as threads, drivers for connecting to a database and many more.

In this case, if the developer relied on a specific version for a given extension, the test would run successfully, but as soon as we try to run the suite in another machine, it would fail - or in the Continuous Integration server.

Not only that, environment variables can get into the ways as well, for example, the following code depicts a component that needs a url to load the survey (some of the code has been removed/modified intentionally and adapted to fit the example - for more info, follow this github link):

import { Component } from 'react';import Button from '../../buttons/primary/Primary'; import '../../../../scss/shake-horizontal.scss';import './survey.scss'; const config = { surveyUrl: process.env.REACT_APP_SURVEY_URL || '',} const survey = config.surveyUrl; const mapStateToProps = state => ({ user: state.userReducer.user,}); export class Survey extends Component { /* skipped code */ componentDidMount = () => { /* skipped code */} onSurveyLoaded = () => { /* skipped code */} skipSurvey = () => { /* skipped code */} render() { if (this.props.user.uid && survey) { return ( <div className={`w-full ${this.props.className}`}> { this.state.loading && <div className="flex justify-center items-center text-white"> <h1>Loading...</h1> </div> } <iframe src={this.state.surveyUrl} title="survey form" onLoad={this.onSurveyLoaded} /> { !this.state.loading && this.props.skip && <Button className="block mt-5 m-auto" description={this.state.buttonDescription} onClick={this.skipSurvey} /> } </div> ); } return ( <div className="flex justify-center items-center text-white"> <h1 className="shake-horizontal">Something wrong happened</h1> </div> ); }} /* skipped code */import { mount } from 'enzyme';import { Survey } from './Survey';import { auth } from '../../../../pages/login/Auth';import Button from '../../buttons/primary/Primary'; describe('Survey page', () => { test('should show up message when survey url is not defined',() => { const wrapper = mount(<Survey user= />); const text = wrapper.find('h1').text(); expect(text).toEqual('Carregando questionário...'); }); test('should not load survey when user id is missing', () => { const wrapper = mount(<Survey user= />); const text = wrapper.find('h1').text(); expect(text).toEqual('Ocorreu um erro ao carregar o questionário'); }); test('load survey passing user id as a parameter in the query string', () => { const user = { uid: 'uhiuqwqw-k-woqk-wq--qw' }; const wrapper = mount(<Survey user={user} />); const url = wrapper.find('iframe').prop('src'); expect(url.includes(auth.user.uid)).toBe(true); }); test('should not up button when it is loading', () => { const user = { uid: 'uhiuqwqw-k-woqk-wq--qw' }; const wrapper = mount(<Survey user={user} />); expect(wrapper.find(Button).length).toBe(0); }); test('should not up button when skip prop is not set', () => { const user = { uid: 'uhiuqwqw-k-woqk-wq--qw' }; const wrapper = mount(<Survey user={user} />); expect(wrapper.find(Button).length).toBe(0); }); test('show up button when loading is done and skip prop is true', () => { const user = { uid: 'uhiuqwqw-k-woqk-wq--qw' }; const wrapper = mount(<Survey user={user} skip={true} />); wrapper.setState({ loading: false }); expect(wrapper.find(Button).length).toBe(1); });});Despite the code age (long time class components in react), it seems to do the job pretty well. Deriving the behavior from the test cases, we can understand that some loading is going on based on the survey URL and the user id. Unfortunately, the details of the implementation are what matter the most - if we run the test case for the current implementation, it will fail.

Test Suites: 1 failed, 62 passed, 63 total

Tests: 3 failed, 593 passed, 596 total

And the fix for such a run, is to export an environment variable named REACT_APP_SURVEY_URL. Well, the easy fix would be to use the env variable. The long term fix, would be to avoid depending on the external definition and assume some defaults, here are some ideas that come to my mind to fix that properly:

Another example, would be relying on the real files, this issue is also discussed in a stack overflow thread. The issue that comes in such a dependent test, is: the test would run only in a windows machine. Ideally external dependencies should be avoided using test doubles.

In this second episode, we covered four more anti-patterns that get in our way while developing applications guided by tests. When such issues arrive in the code base, it's normal to perceive that tests - or writing tests first - will slow down the delivery of a task if compared to no tests. But, on the other hand, it also might be a double-edged sword, in which not having tests could slow you even more.

The mockery was the most popular anti-pattern, with answers from the survey showing that misusing test doubles (or as we know them, mocks) can be a source of problems. We also saw that reflection could be an issue while testing and resetting state is important to keep the test suite sharp. Finally, we covered the problem in which the test suite only runs on the developers machine (or for today's standard, in the developers docker image?).

All in all, we hope that this sums up to the previous episode and that you will keep your test suite running smoothly

You have reached the highest level. You have completed the learning phases and we move on to the more complex katas. Remember that in the link of..

Our recommendation is that you first go through Object-Oriented Programming katas so that you can move on to more complex testing concepts. If so, we..

Once basic concepts such as conditionals, loops, and simple structures have been introduced and reinforced, and TDD has been strictly practiced, it's..

Join our newsletter for expert tips and inspirational case studies

Join our newsletter for expert tips and inspirational case studies